Same data, different analysts: variation in effect sizes due to analytical decisions in ecology and evolutionary biology.

Although variation in effect sizes and predicted values among studies of similar phenomena is inevitable, such variation far exceeds what might be produced by sampling error alone. One possible explanation for variation among results is differences among researchers in the decisions they make regarding statistical analyses. A growing array of studies has explored this analytical variability in different fields and has found substantial variability among results despite analysts having the same data and research question. Many of these studies have been in the social sciences, but one small ‘many analyst’ study found similar variability in ecology. We expanded the scope of this prior work by implementing a large-scale empirical exploration of the variation in effect sizes and model predictions generated by the analytical decisions of different researchers in ecology and evolutionary biology. We used two unpublished datasets, one from evolutionary ecology (blue tit, Cyanistes caeruleus, to compare sibling number and nestling growth) and one from conservation ecology (Eucalyptus, to compare grass cover and tree seedling recruitment), and the project leaders recruited 174 analyst teams, comprising 246 analysts, to investigate the answers to prespecified research questions. Analyses conducted by these teams yielded 141 usable effects (compatible with our meta-analyses and all necessary information provided) for the blue tit dataset, and 85 usable effects for the Eucalyptus dataset. We found substantial heterogeneity among results for both datasets, although the patterns of variation differed between them. For the blue tit analyses, the average effect was convincingly negative, with less growth for nestlings living with more siblings, but there was near continuous variation in effect size from large negative effects to effects near zero, and even effects crossing the traditional threshold of statistical significance in the opposite direction. In contrast, the average relationship between grass cover and Eucalyptus seedling number was only slightly negative and not convincingly different from zero, and most effects ranged from weakly negative to weakly positive, with about a third of effects crossing the traditional threshold of significance in one direction or the other. However, there were also several striking outliers in the Eucalyptus dataset, with effects far from zero. For both datasets, we found substantial variation in the variable selection and random effects structures among analyses, as well as in the ratings of the analytical methods by peer reviewers, but we found no strong relationship between any of these and deviation from the meta-analytic mean. In other words, analyses with results that were far from the mean were no more or less likely to have dissimilar variable sets, use random effects in their models, or receive poor peer reviews than those analyses that found results that were close to the mean. The existence of substantial variability among analysis outcomes raises important questions about how ecologists and evolutionary biologists should interpret published results, and how they should conduct analyses in the future.

1 Introduction

One value of science derives from its production of replicable, and thus reliable, results. When we repeat a study using the original methods we should be able to expect a similar result. However, perfect replicability is not a reasonable goal. Effect sizes will vary, and even reverse in sign, by chance alone (Gelman and Weakliem 2009). Observed patterns can differ for other reasons as well. It could be that we do not sufficiently understand the conditions that led to the original result so when we seek to replicate it, the conditions differ due to some ‘hidden moderator’. This hidden moderator hypothesis is described by meta-analysts in ecology and evolutionary biology as ‘true biological heterogeneity’ (Senior et al. 2016). This idea of true heterogeneity is popular in ecology and evolutionary biology, and there are good reasons to expect it in the complex systems in which we work (Shavit and Ellison 2017). However, despite similar expectations in psychology, recent evidence in that discipline contradicts the hypothesis that moderators are common obstacles to replicability, as variability in results in a large ‘many labs’ collaboration was mostly unrelated to commonly hypothesized moderators such as the conditions under which the studies were administered (Klein et al. 2018). Another possible explanation for variation in effect sizes is that researchers often present biased samples of results, thus reducing the likelihood that later studies will produce similar effect sizes (Open Science Collaboration 2015; Parker et al. 2016; Forstmeier, Wagenmakers, and Parker 2017; Fraser et al. 2018; Parker and Yang 2023). It also may be that although researchers did successfully replicate the conditions, the experiment, and measured variables, analytical decisions differed sufficiently among studies to create divergent results (Simonsohn, Simmons, and Nelson 2015; Silberzahn et al. 2018).

Analytical decisions vary among studies because researchers have many options. Researchers need to decide how to exclude possibly anomalous or unreliable data, how to construct variables, which variables to include in their models, and which statistical methods to use. Depending on the dataset, this short list of choices could encompass thousands or millions of possible alternative specifications (Simonsohn, Simmons, and Nelson 2015). However, researchers making these decisions presumably do so with the goal of doing the best possible analysis, or at least the best analysis within their current skill set. Thus it seems likely that some specification options are more probable than others, possibly because they have previously been shown (or claimed) to be better, or because they are more well known. Of course, some of these different analyses (maybe many of them) may be equally valid alternatives. Regardless, on probably any topic in ecology and evolutionary biology, we can encounter differences in choices of data analysis. The extent of these differences in analyses and the degree to which these differences influence the outcomes of analyses and therefore studies’ conclusions are important empirical questions. These questions are especially important given that many papers draw conclusions after applying a single method, or even a single statistical model, to analyze a dataset.

The possibility that different analytical choices could lead to different outcomes has long been recognized (Gelman and Loken 2013), and various efforts to address this possibility have been pursued in the literature. For instance, one common method in ecology and evolutionary biology involves creating a set of candidate models, each consisting of a different (though often similar) set of predictor variables, and then, for the predictor variable of interest, averaging the slope across all models (i.e. model averaging) (Burnham and Anderson 2002; Grueber et al. 2011). This method reduces the chance that a conclusion is contingent upon a single model specification, though use and interpretation of this method is not without challenges (Grueber et al. 2011). Further, the models compared to each other typically differ only in the inclusion or exclusion of certain predictor variables and not in other important ways, such as methods of parameter estimation. More explicit examination of outcomes of differences in model structure, model type, data exclusion, or other analytical choices can be implemented through sensitivity analyses (e.g., Noble et al. 2017). Sensitivity analyses, however, are typically rather narrow in scope, and are designed to assess the sensitivity of analytical outcomes to a particular analytical choice rather than to a large universe of choices. Recently, however, analysts in the social sciences have proposed extremely thorough sensitivity analysis, including ‘multiverse analysis’ (Steegen et al. 2016) and the ‘specification curve’ (Simonsohn, Simmons, and Nelson 2015), as a means of increasing the reliability of results. With these methods, researchers identify relevant decision points encountered during analysis and conduct the analysis many times to incorporate many plausible decisions made at each of these points. The study’s conclusions are then based on a broad set of the possible analyses and so allow the analyst to distinguish between robust conclusions and those that are highly contingent on particular model specifications. These are useful outcomes, but specifying a universe of possible modelling decisions is not a trivial undertaking. Further, the analyst’s knowledge and biases will influence decisions about the boundaries of that universe, and so there will always be room for disagreement among analysts about what to include. Including more specifications is not necessarily better. Some analytical decisions are better justified than others, and including biologically implausible specifications may undermine this process. Regardless, these powerful methods have yet to be adopted, and even more limited forms of sensitivity analyses are not particularly widespread. Most studies publish a small set of analyses and so the existing literature does not provide much insight into the degree to which published results are contingent on analytical decisions.

Despite the potential major impacts of analytical decisions on variance in results, the outcomes of different individuals’ data analysis choices have only recently begun to receive much empirical attention. The only formal exploration of this that we were aware of when we submitted our Stage 1 manuscript were (1) an analysis in social science that asked whether male professional football (soccer) players with darker skin tone were more likely to be issued red cards (ejection from the game for rule violation) than players with lighter skin tone (Silberzahn et al. 2018) and (2) an analysis in neuroimaging which evaluated nine separate hypotheses involving the neurological responses detected with fMRI in 108 participants divided between two treatments in a decision making task (Botvinik-Nezer et al. 2020). Several others have been published since (e.g., Huntington-Klein et al. 2021; Schweinsberg et al. 2021; Breznau et al. 2022; Coretta et al. 2023), and we recently learned of an earlier small study in ecology (Stanton-Geddes, de Freitas, and de Sales 2014). In the red card study, 29 teams designed and implemented analyses of a dataset provided by the study coordinators (Silberzahn et al. 2018). Analyses were peer reviewed (results blind) by at least two other participating analysts; a level of scrutiny consistent with standard pre-publication peer review. Among the final 29 analyses, odds-ratios varied from 0.89 to 2.93, meaning point estimates varied from having players with lighter skin tones receive more red cards (odds ratio < 1) to a strong effect of players with darker skin tones receiving more red cards (odds ratio > 1). Twenty of the 29 teams found a statistically-significant effect in the predicted direction of players with darker skin tones being issued more red cards. This degree of variation in peer-reviewed analyses from identical data is striking, but the generality of this finding has only just begun to be formally investigated (e.g., Huntington-Klein et al. 2021; Schweinsberg et al. 2021; Breznau et al. 2022; Coretta et al. 2023).

In the neuroimaging study, 70 teams evaluated each of the nine different hypotheses with the available fMRI data (Botvinik-Nezer et al. 2020). These 70 teams followed a divergent set of workflows that produced a wide range of results. The rate of reporting of statistically significant support for the nine hypotheses ranged from 21\(\%\)to 84\(\%\), and for each hypothesis on average, 20\(\%\) of research teams observed effects that differed substantially from the majority of other teams. Some of the variability in results among studies could be explained by analytical decisions such as choice of software package, smoothing function, and parametric versus non-parametric corrections for multiple comparisons. However, substantial variability among analyses remained unexplained, and presumably emerged from the many different decisions each analyst made in their long workflows. Such variability in results among analyses from this dataset and from the very different red-card dataset suggests that sensitivity of analytical outcome to analytical choices may characterize many distinct fields, as several more recent many-analyst studies also suggest (Huntington-Klein et al. 2021; Schweinsberg et al. 2021; Breznau et al. 2022).

To further develop the empirical understanding of the effects of analytical decisions on study outcomes, we chose to estimate the extent to which researchers’ data analysis choices drive differences in effect sizes, model predictions, and qualitative conclusions in ecology and evolutionary biology. This is an important extension of the meta-research agenda of evaluating factors influencing replicability in ecology, evolutionary biology, and beyond (Fidler et al. 2017). To examine the effects of analytical decisions, we used two different datasets and recruited researchers to analyze one or the other of these datasets to answer a question we defined. The first question was “To what extent is the growth of nestling blue tits (Cyanistes caeruleus) influenced by competition with siblings?” To answer this question, we provided a dataset that includes brood size manipulations from 332 broods conducted over three years at Wytham Wood, UK. The second question was “How does grass cover influence Eucalyptus spp. seedling recruitment?” For this question, analysts used a dataset that includes, among other variables, number of seedlings in different size classes, percentage cover of different life forms, tree canopy cover, and distance from canopy edge from 351 quadrats spread among 18 sites in Victoria, Australia.

We explored the impacts of data analysts’ choices with descriptive statistics and with a series of tests to attempt to explain the variation among effect sizes and predicted values of the dependent variable produced by the different analysis teams for both datasets separately. To describe the variability, we present forest plots of the standardized effect sizes and predicted values produced by each of the analysis teams, estimate heterogeneity (both absolute, \(\tau^2\), and proportional, \(I^2\)) in effect size and predicted values among the results produced by these different teams, and calculate a similarity index that quantifies variability among the predictor variables selected for the different statistical models constructed by the different analysis teams. These descriptive statistics provide the first estimates of the extent to which explanatory statistical models and their outcomes in ecology and evolutionary biology vary based on the decisions of different data analysts. We then quantified the degree to which the variability in effect size and predicted values could be explained by (1) variation in the quality of analyses as rated by peer reviewers and (2) the similarity of the choices of predictor variables between individual analyses.

2 Methods

This project involved a series of steps (1-6) that began with identifying datasets for analyses and continued through recruiting independent groups of scientists to analyze the data, allowing the scientists to analyze the data as they saw fit, generating peer review ratings of the analyses (based on methods, not results), evaluating the variation in effects among the different analyses, and producing the final manuscript.

2.1 Step 1: Select Datasets

We used two previously unpublished datasets, one from evolutionary ecology and the other from ecology and conservation.

Evolutionary ecology

Our evolutionary ecology dataset is relevant to a sub-discipline of life-history research which focuses on identifying costs and trade-offs associated with different phenotypic conditions. These data were derived from a brood-size manipulation experiment imposed on wild birds nesting in boxes provided by researchers in an intensively studied population. Understanding how the growth of nestlings is influenced by the numbers of siblings in the nest can give researchers insights into factors such as the evolution of clutch size, determination of provisioning rates by parents, and optimal levels of sibling competition (Vander Werf 1992; DeKogel 1997; Royle et al. 1999; Verhulst, Holveck, and Riebel 2006; Nicolaus et al. 2009). Data analysts were provided this dataset and instructed to answer the following question: “To what extent is the growth of nestling blue tits (Cyanistes caeruleus) influenced by competition with siblings?”

Researchers conducted brood size manipulations and population monitoring of blue tits at Wytham Wood, a 380 ha woodland in Oxfordshire, U.K (1º 20’W, 51º 47’N). Researchers regularly checked approximately 1100 artificial nest boxes at the site and monitored the 330 to 450 blue tit pairs occupying those boxes in 2001-2003 during the experiment. Nearly all birds made only one breeding attempt during the April to June study period in a given year. At each blue tit nest, researchers recorded the date the first egg appeared, clutch size, and hatching date. For all chicks alive at age 14 days, researchers measured mass and tarsus length and fitted a uniquely numbered, British Trust for Ornithology (BTO) aluminium leg ring. Researchers attempted to capture all adults at their nests between day 6 and day 14 of the chick-rearing period. For these captured adults, researchers measured mass, tarsus length, and wing length and fitted a uniquely numbered BTO leg ring. During the 2001-2003 breeding seasons, researchers manipulated brood sizes using cross fostering. They matched broods for hatching date and brood size and moved chicks between these paired nests one or two days after hatching. They sought to either enlarge or reduce all manipulated broods by approximately one fourth. To control for effects of being moved, each reduced brood had a portion of its brood replaced by chicks from the paired increased brood, and vice versa. Net manipulations varied from plus or minus four chicks in broods of 12 to 16 to plus or minus one chick in broods of 4 or 5. Researchers left approximately one third of all broods unmanipulated. These unmanipulated broods were not selected systematically to match manipulated broods in clutch size or laying date. We have mass and tarsus length data from 3720 individual chicks divided among 167 experimentally enlarged broods, 165 experimentally reduced broods, and 120 unmanipulated broods. The full list of variables included in the dataset is publicly available (https://osf.io/hdv8m), along with the data (https://osf.io/qjzby).

Shortly after beginning to recruit analysts, several analysts noted a small set of related errors in the blue tit dataset. We corrected the errors, replaced the dataset on our OSF site, and emailed the analysts on 19 April 2020 to instruct them to use the revised data. The email to analysts is available here (https://osf.io/4h53z). The errors are explained in that email.

Ecology and conservation

Our ecology and conservation dataset is relevant to a sub-discipline of conservation research which focuses on investigating how best to revegetate private land in agricultural landscapes. These data were collected on private land under the Bush Returns program, an incentive system where participants entered into a contract with the Goulburn Broken Catchment Management Authority and received annual payments if they executed predetermined restoration activities. This particular dataset is based on a passive regeneration initiative, where livestock grazing was removed from the property in the hopes that the Eucalyptus spp. overstorey would regenerate without active (and expensive) planting. Analyses of some related data have been published (Miles 2008; Vesk et al. 2016) but those analyses do not address the question analysts answered in our study. Data analysts were provided this dataset and instructed to answer the following question: “How does grass cover influence Eucalyptus spp. seedling recruitment?”.

Researchers conducted three rounds of surveys at 18 sites across the Goulburn Broken catchment in northern Victoria, Australia in winter and spring 2006 and autumn 2007. In each survey period, a different set of 15 x 15 m quadrats were randomly allocated across each site within 60 m of existing tree canopies. The number of quadrats at each site depended on the size of the site, ranging from four at smaller sites to 11 at larger sites. The total number of quadrats surveyed across all sites and seasons was 351. The number of Eucalyptus spp. seedlings was recorded in each quadrat along with information on the GPS location, aspect, tree canopy cover, distance to tree canopy, and position in the landscape. Ground layer plant species composition was recorded in three 0.5 x 0.5 m sub-quadrats within each quadrat. Subjective cover estimates of each species as well as bare ground, litter, rock and moss/lichen/soil crusts were recorded. Subsequently, this was augmented with information about the precipitation and solar radiation at each GPS location. The full list of variables included in the dataset is publicly available (https://osf.io/r5gbn), along with the data (https://osf.io/qz5cu).

2.2 Step 2: Recruitment and Initial Survey of Analysts

The lead team (TP, HF, SN, EG, SG, PV, DH, FF) created a publicly available document providing a general description of the project (https://osf.io/mn5aj/). The project was advertised at conferences, via Twitter, using mailing lists for ecological societies (including Ecolog, Evoldir, and lists for the Environmental Decisions Group, and Transparency in Ecology and Evolution), and via word of mouth. The target population was active ecology, conservation, or evolutionary biology researchers with a graduate degree (or currently studying for a graduate degree) in a relevant discipline. Researchers could choose to work independently or in a small team. For the sake of simplicity, we refer to these as ‘analysis teams’ though some comprised one individual. We aimed for a minimum of 12 analysis teams independently evaluating each dataset (see sample size justification below). We simultaneously recruited volunteers to peer review the analyses conducted by the other volunteers through the same channels. Our goal was to recruit a similar number of peer reviewers and analysts, and to ask each peer reviewer to review a minimum of four analyses. If we were unable to recruit at least half the number of reviewers as analysis teams, we planned to ask analysts to serve also as reviewers (after they had completed their analyses), but this was unnecessary. Therefore, no data analysts peer reviewed analyses of the dataset they had analyzed. All analysts and reviewers were offered the opportunity to share co-authorship on this manuscript and we planned to invite them to participate in the collaborative process of producing the final manuscript. All analysts signed [digitally] a consent (ethics) document (https://osf.io/xyp68/) approved by the Whitman College Institutional Review Board prior to being allowed to participate.

Due to the large number of recruited analysts and reviewers and the anticipated challenges of receiving and integrating feedback from so many authors, we limited analyst and reviewer participation in the production of the final manuscript to an invitation to call attention to serious problems with the manuscript draft.

We identified our minimum number of analysts per dataset by considering the number of effects needed in a meta-analysis to generate an estimate of heterogeneity (\(\tau^{2}\)) with a 95\(\%\)confidence interval that does not encompass zero. This minimum sample size is invariant regardless of \(\tau^{2}\). This is because the same t-statistic value will be obtained by the same sample size regardless of variance (\(\tau^{2}\)). We see this by first examining the formula for the standard error, \(\text{SE}\) for variance, (\(\tau^{2}\)) or (\(\text{SE}\tau^{2}\)) assuming normality in an underlying distribution of effect sizes (Knight 2000):

\[ \text{SE}({{\tau}^2})=\sqrt{\frac{{2\tau}^4}{n-1}} \tag{2.1}\]

and then rearranging the above formula to show how the t-statistic is independent of \(\tau^2\), as seen below.

\[ t=\frac{{\tau}^2}{SE({{\tau}^2})}=\sqrt{\frac{n-1}{2}} \tag{2.2}\]

We then find a minimum n = 12 according to this formula.

2.3 Step 3: Primary Data Analyses

Analysis teams registered and answered a demographic and expertise survey (https://osf.io/seqzy/). We then provided them with the dataset of their choice and requested that they answer a specific research question. For the evolutionary ecology dataset that question was “To what extent is the growth of nestling blue tits (Cyanistes caeruleus) influenced by competition with siblings?” and for the conservation ecology dataset it was “How does grass cover influence Eucalyptus spp. seedling recruitment?” Once their analysis was complete, they answered a structured survey (https://osf.io/neyc7/), providing analysis technique, explanations of their analytical choices, quantitative results, and a statement describing their conclusions. They also were asked to upload their analysis files (including the dataset as they formatted it for analysis and their analysis code [if applicable]) and a detailed journal-ready statistical methods section.

As is common in many studies in ecology and evolutionary biology, the datasets we provided contained many variables, and the research questions we provided could be addressed by our datasets in many different ways. For instance, volunteer analysts had to choose the dependent (response) variable and the independent variable, and make numerous other decisions about which variables and data to use and how to structure their model.

We originally planned to have analysts complete a single survey (https://osf.io/neyc7/), but after we evaluated the results of that survey, we realized we would need a second survey (https://osf.io/8w3v5/) to adequately collect the information we needed to evaluate heterogeneity of results (step 5). We provided a set of detailed instructions with the follow-up survey, and these instructions are publicly available and can be found within the following files (blue tit: https://osf.io/kr2g9, Eucalyptus: https://osf.io/dfvym).

2.4 Step 4: Peer Reviews of Analyses

At minimum, each analysis was evaluated by four different reviewers, and each volunteer peer reviewer was randomly assigned methods sections from at least four analyst teams (the exact number varied). Each peer reviewer registered and answered a demographic and expertise survey identical to that asked of the analysts, except we did not ask about ‘team name’ since reviewers did not work in teams. Reviewers evaluated the methods of each of their assigned analyses one at a time in a sequence determined by the project leaders. We systematically assigned the sequence so that, if possible, each analysis was allocated to each position in the sequence for at least one reviewer. For instance, if each reviewer were assigned four analyses to review, then each analysis would be the first analysis assigned to at least one reviewer, the second analysis assigned to another reviewer, the third analysis assigned to yet another reviewer, and the fourth analysis assigned to a fourth reviewer. Balancing the order in which reviewers saw the analyses controls for order effects, e.g. a reviewer might be less critical of the first methods section they read than the last.

The process for a single reviewer was as follows. First, the reviewer received a description of the methods of a single analysis. This included the narrative methods section, the analysis team’s answers to our survey questions regarding their methods, including analysis code, and the dataset. The reviewer was then asked, in an online survey (https://osf.io/4t36u/), to rate that analysis on a scale of 0-100 based on this prompt: “Rate the overall appropriateness of this analysis to answer the research question (one of the two research questions inserted here) with the available data. To help you calibrate your rating, please consider the following guidelines:

- A perfect analysis with no conceivable improvements from the reviewer

- An imperfect analysis but the needed changes are unlikely to dramatically alter outcomes

- A flawed analysis likely to produce either an unreliable estimate of the relationship or an over-precise estimate of uncertainty

- A flawed analysis likely to produce an unreliable estimate of the relationship and an over-precise estimate of uncertainty

- A dangerously misleading analysis, certain to produce both an estimate that is wrong and a substantially over-precise estimate of uncertainty that places undue confidence in the incorrect estimate.

*Please note that these values are meant to calibrate your ratings. We welcome ratings of any number between 0 and 100.

After providing this rating, the reviewer was presented with this prompt, in multiple-choice format: “Would the analytical methods presented produce an analysis that is (a) publishable as is, (b) publishable with minor revision, (c) publishable with major revision, (d) deeply flawed and unpublishable?” The reviewer was then provided with a series of text boxes and the following prompts: “Please explain your ratings of this analysis. Please evaluate the choice of statistical analysis type. Please evaluate the process of choosing variables for and structuring the statistical model. Please evaluate the suitability of the variables included in (or excluded from) the statistical model. Please evaluate the suitability of the structure of the statistical model. Please evaluate choices to exclude or not exclude subsets of the data. Please evaluate any choices to transform data (or, if there were no transformations, but you think there should have been, please discuss that choice).” After submitting this review, a methods section from a second analysis was then made available to the reviewer. This same sequence was followed until all analyses allocated to a given reviewer were provided and reviewed. After providing the final review, the reviewer was simultaneously provided with all four (or more) methods sections the reviewer had just completed reviewing, the option to revise their original ratings, and a text box to provide an explanation. The invitation to revise the original ratings was as follows: “If, now that you have seen all the analyses you are reviewing, you wish to revise your ratings of any of these analyses, you may do so now.” The text box was prefaced with this prompt: “Please explain your choice to revise (or not to revise) your ratings.”

To determine how consistent peer reviewers were in their ratings, we assessed inter-rater reliability among reviewers for both the categorical and quantitative ratings combining blue tit and Eucalyptus data using Krippendorff’s alpha for ordinal and continuous data respectively. This provides a value that is between -1 (total disagreement between reviewers) and 1 (total agreement between reviewers).

2.5 Step 5: Evaluate Variation

The lead team conducted the analyses outlined in this section. We described the variation in model specification in several ways. We calculated summary statistics describing variation among analyses, including mean, \(\text{SD}\), and range of number of variables per model included as fixed effects, the number of interaction terms, the number of random effects, and the mean, \(\text{SD}\), and range of sample sizes. We also present the number of analyses in which each variable was included. We summarized the variability in standardized effect sizes and predicted values of dependent variables among the individual analyses using standard random effects meta-analytic techniques. First, we derived standardized effect sizes from each individual analysis. We did this for all linear models or generalized linear models by converting the \(t\) value and the degree of freedom (\(\mathit{df}\)) associated with regression coefficients (e.g. the effect of the number of siblings [predictor] on growth [response] or the effect of grass cover [predictor] on seedling recruitment [response]) to the correlation coefficient, \(r\), using the following:

\[ r=\sqrt{\frac{{t}^2}{\left({{t}^2}+\mathit{df}\right) }} \tag{2.3}\]

This formula can only be applied if \(t\) and \(\mathit{df}\) values originate from linear or generalized linear models [GLMs; Nakagawa and Cuthill (2007)]. If, instead, linear mixed-effects models (LMMs) or generalized linear mixed-effects models (GLMMs) were used by a given analysis, the exact \(\mathit{df}\) cannot be estimated. However, adjusted \(\mathit{df}\) can be estimated, for example, using the Satterthwaite approximation of \(\mathit{df}\), \(\mathit{df}_S\), [note that SAS uses this approximation to obtain \(\mathit{df}\) for LMMs and GLMMs; Luke (2017)]. For analyses using either LMMs or GLMMs that do not produce \(\mathit{df}_S\) we planned to obtain \(\mathit{df}_S\) by rerunning the same (G)LMMs using the lmer() or glmer() function in the lmerTest package in R (Kuznetsova, Brockhoff, and Christensen 2017; R Core Team 2024).

Rather than re-run these analyses ourselves, we sent a follow-up survey (referenced above under “Primary data analyses”) to analysts and asked them to follow our instructions for producing this information. The instructions are publicly available and can be found within the following files (blue tit: https://osf.io/kr2g9, Eucalyptus: https://osf.io/dfvym).

We then used the \(t\) values and \(\mathit{df}_S\) from the models to obtain \(r\) as per the formula above. All \(r\) and accompanying \(\mathit{df}\) (or \(\mathit{df}_S\)) were converted to Fisher’s \(Z_r\)

\[ Z_r = \frac{1}{2} \ln(\dfrac{1+r}{1-r}) \tag{2.4}\]

and its sampling variance; \(1/(n – 3)\) where \(n = df + 1\). Any analyses from which we could not derive a signed \(Z_r\), for instance one with a quadratic function in which the slope changed sign, were considered unusable for analyses of \(Z_r\) . We expected such analyses would be rare. In fact, most submitted analyses excluded from our meta-analysis of \(Z_r\) were excluded because of a lack of sufficient information provided by the analyst team rather than due to the use of effects that could not be converted to \(Z_r\). Regardless, as we describe below, we generated a second set of standardized effects (predicted values) that could (in principle) be derived from any explanatory model produced by these data.

Besides \(Z_r\), which describes the strength of a relationship based on the amount of variation in a dependent variable explained by variation in an independent variable, we also examined differences in the shape of the relationship between the independent and dependent variables. To accomplish this, we derived a point estimate (out-of-sample predicted value) for the dependent variable of interest for each of three values of our primary independent variable. We originally described these three values as associated with the 25th percentile, median, and 75th percentile of the independent variable and any covariates.

The original description of the out-of-sample specifications did not account for the facts that (a) some variables are not distributed in a way that allowed division in percentiles and that (b) variables could be either positively or negatively correlated with the dependent variable. We provide a more thorough description here: We derived three point-estimates (out-of-sample predicted values) for the dependent variable of interest; one for each of three values of our primary independent variable that we specified. We also specified values for all other variables that could have been included as independent variables in analysts’ models so that we could derive the predicted values from a fully specified version of any model produced by analysts. For all potential independent variables, we selected three values or categories. Of the three we selected, one was associated with small, one with intermediate, and one with large values of one typical dependent variable (day 14 chick weight for the blue tit data and total number of seedlings for the Eucalyptus data; analysts could select other variables as their dependent variable, but the others typically correlated with the two identified here). For continuous variables, this means we identified the 25th percentile, median, and 75th percentile and, if the slope of the linear relationship between this variable and the typical dependent variable was positive, we left the quartiles ordered as is. If, instead, the slope was negative, we reversed the order of the independent variable quartiles so that the ‘lower’ quartile value was the one associated with the lower value for the dependent variable. In the case of categorical variables, we identified categories associated with the 25th percentile, median, and 75th percentile values of the typical dependent variable after averaging the values for each category. However, for some continuous and categorical predictors, we also made selections based on the principle of internal consistency between certain related variables, and we fixed a few categorical variables as identical across all three levels where doing so would simplify the modelling process (specification tables available: blue tit: https://osf.io/86akx; Eucalyptus: https://osf.io/jh7g5).

We used the 25th and 75th percentiles rather than minimum and maximum values to reduce the chance of occupying unrealistic parameter space. We planned to derive these predicted values from the model information provided by the individual analysts. All values (predictions) were first transformed to the original scale along with their standard errors (\(\text{SE}\)); we used the delta method (Ver Hoef 2012) for the transformation of \(\text{SE}\). We used the square of the \(\text{SE}\) associated with predicted values as the sampling variance in the meta-analyses described below, and we planned to analyze these predicted values in exactly the same ways as we analyzed \(Z_r\) in the following analyses.

1. Standardizing blue tit out-of-sample predictions \(y_i\)

Because analysts of blue tit data chose different dependent variables on different scales, after transforming out-of-sample values to the original scales, we standardized all values as z scores (‘standard scores’) to put all dependent variables on the same scale and make them comparable. This involved taking each relevant value on the original scale (whether a predicted point estimate or a \(\text{SE}\) associated with that estimate) and subtracting the value in question from the mean value of that dependent variable derived from the full dataset and then dividing this difference by the standard deviation, \(\text{SD}\), corresponding to the mean from the full dataset (Equation B.1). Thus, all our out-of-sample prediction values from the blue tit data are from a distribution with the mean of 0 and \(\text{SD}\) of 1.

Note that we were unable to standardise some analyst-constructed variables, so these analyses were excluded from the final out-of-sample estimates meta-analysis, see Section B.1.2.1 for details and explanation.

2. Log-transforming Eucalyptus out-of-sample predictions \(y_i\)

All analyses of the Eucalyptus data chose dependent variables that were on the same scale, that is, Eucalyptus seedling counts. Although analysts may have used different size-classes of Eucalyptus seedlings for their dependent variable, we considered these choices to be akin to subsetting, rather than as different response variables, since changing the size-class of the dependent variable ultimately results in observations being omitted or included. Consequently, we did not standardise Eucalyptus out-of-sample predictions.

We were unable to fit quasi-Poisson or Poisson meta-regressions, as desired (O’Hara and Kotze 2010), because available meta-analysis packages (e.g. metafor:: and metainc::) do not provide implementation for outcomes as estimates-only, methods are only provided for outcomes as ratios or rate-differences between two groups. Consequently, we log-transformed the out-of-sample predictions for the Eucalyptus data and use the mean estimate for each prediction scenario as the dependent variable in our meta-analysis with the associated \(\text{SE}\) as the sampling variance in the meta-analysis (Nakagawa, Yang, et al. 2023, Table 2).

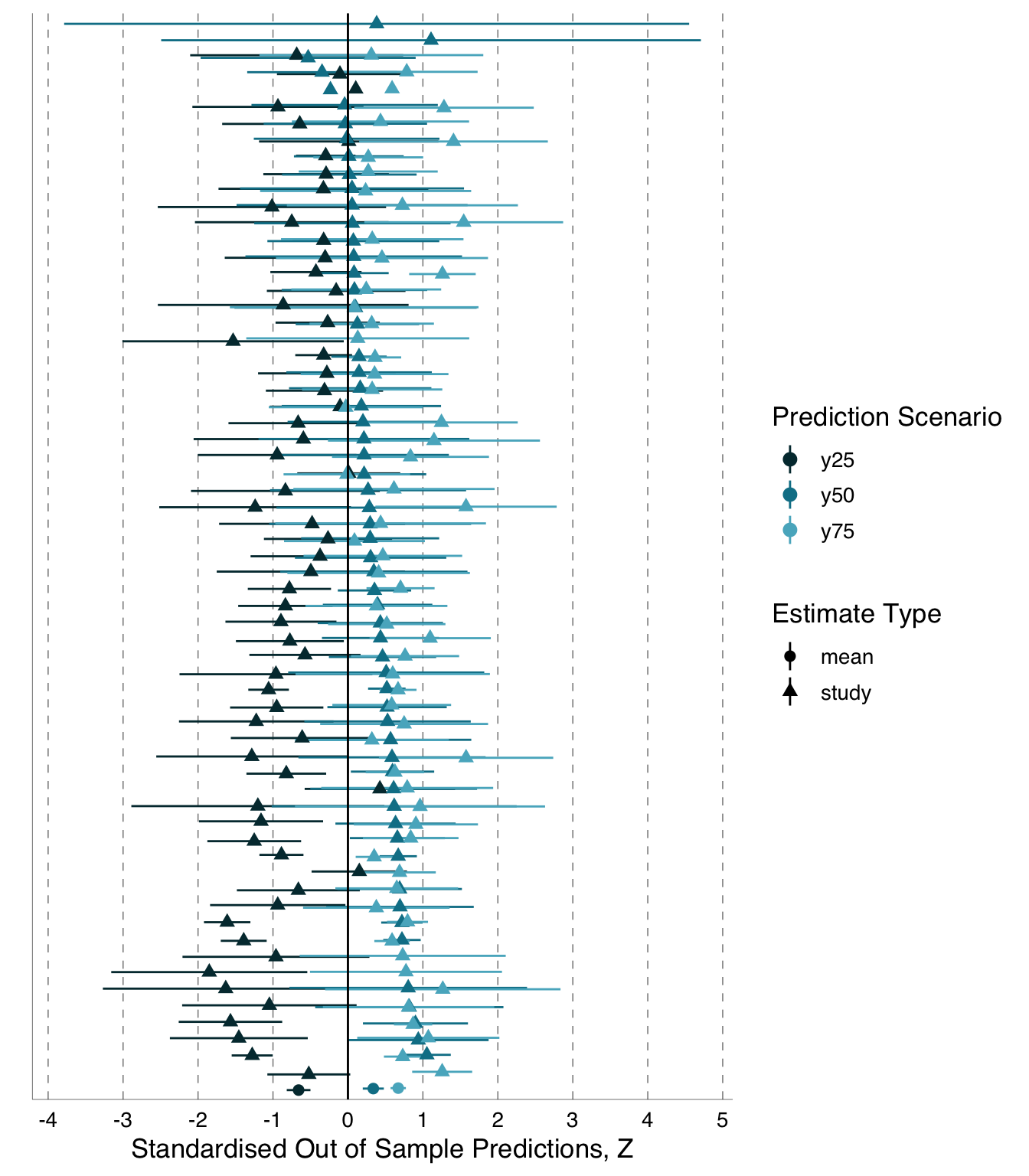

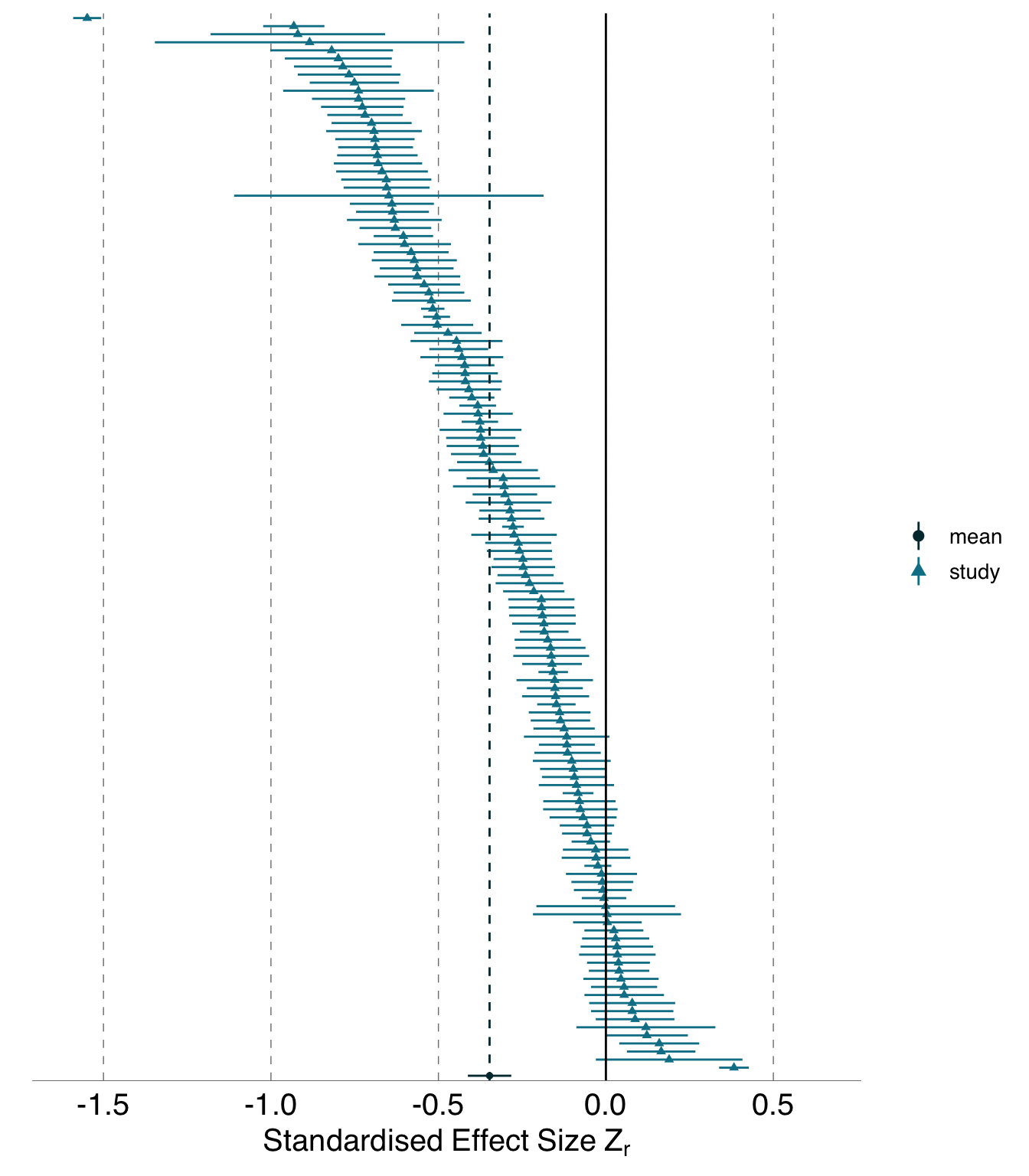

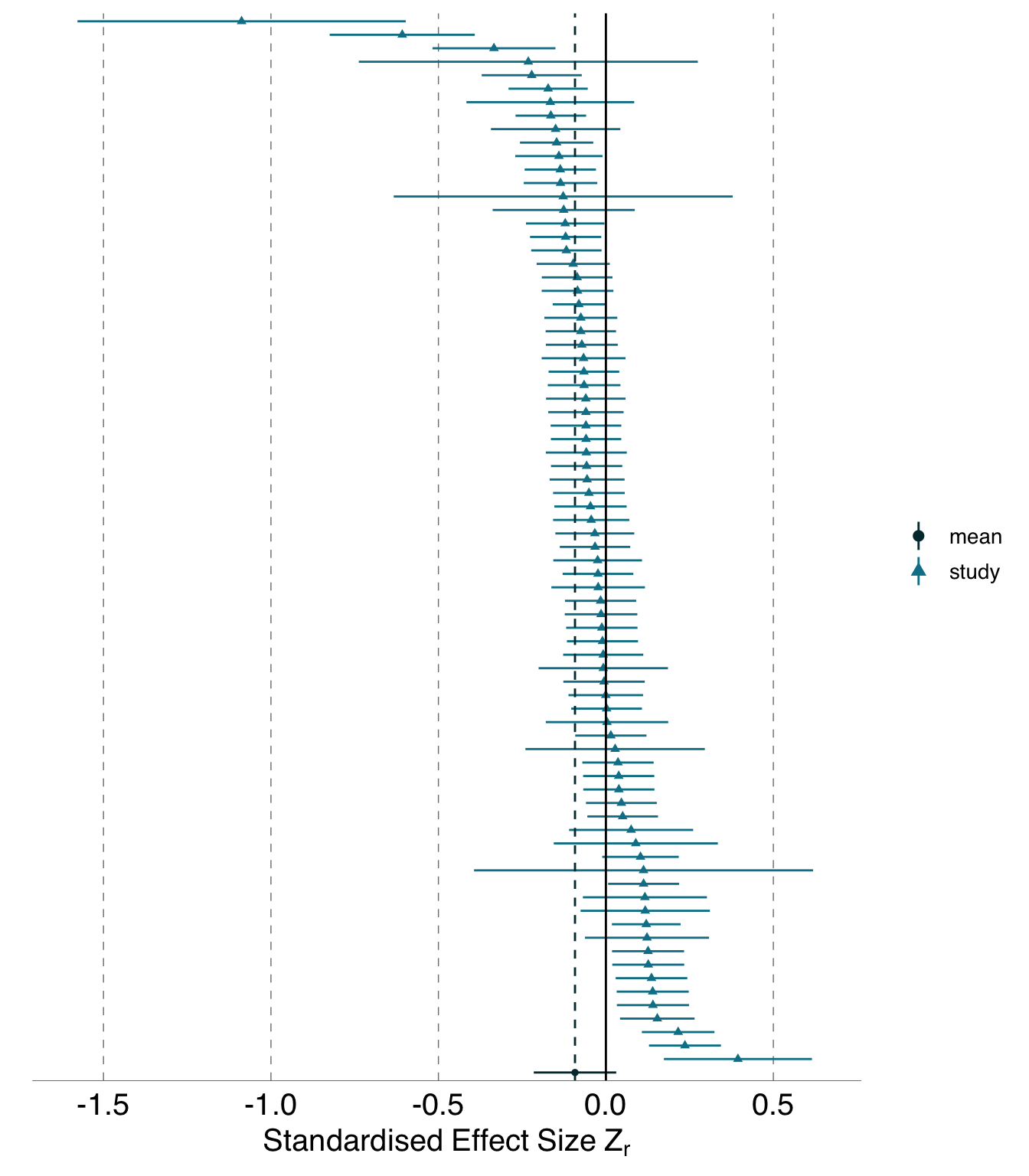

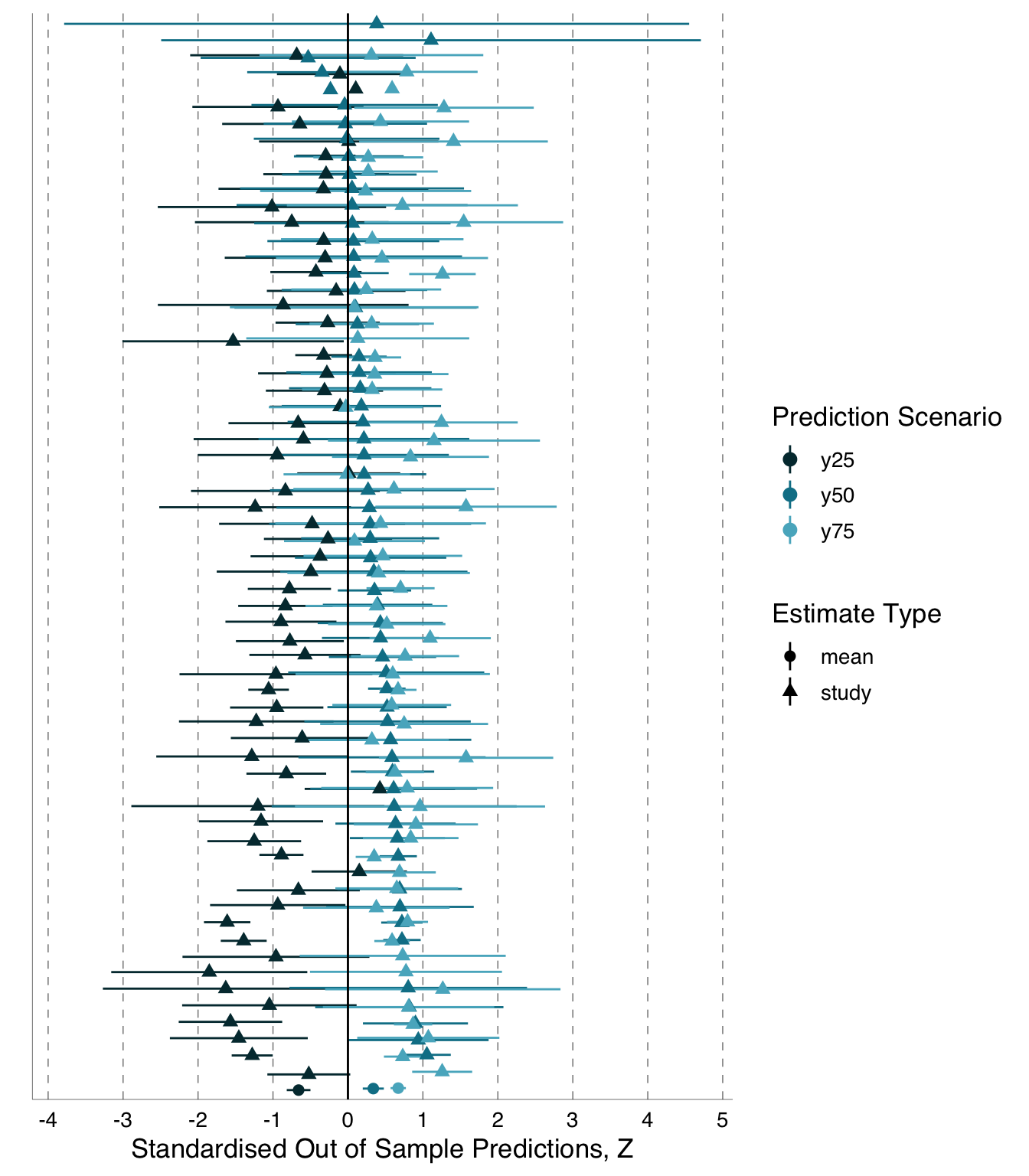

We plotted individual effect size estimates (\(Z_r\)) and predicted values of the dependent variable (\(y_i\)) and their corresponding 95\(\%\)confidence / credible intervals in forest plots to allow visualization of the range and precision of effect size and predicted values. Further, we included these estimates in random effects meta-analyses (Higgins et al. 2003; Borenstein et al. 2017) using the metafor package in R (Viechtbauer 2010; R Core Team 2024):

\[ Z_r \sim 1 + \left(1 \vert \text{analysisID} \right) \tag{2.5}\]

\[ y_i \sim 1 + \left(1 \vert \text{analysisID} \right) \tag{2.6}\]

where \(y_i\) is the predicted value for the dependent variable at the 25th percentile, median, or 75th percentile of the independent variables. The individual \(Z_r\) effect sizes were weighted with the inverse of sampling variance for \(Z_r\). The individual predicted values for dependent variable (\(y_i\)) were weighted by the inverse of the associated \(\text{SE}^2\) (original registration omitted “inverse of the” in error). These analyses provided an average \(Z_r\) score or an average \(y_i\) with corresponding 95\(\%\)confidence interval and allowed us to estimate two heterogeneity indices, \(\tau^2\) and \(I^2\). The former, \(\tau^2\), is the absolute measure of heterogeneity or the between-study variance (in our case, between-effect variance) whereas \(I^2\) is a relative measure of heterogeneity. We obtained the estimate of relative heterogeneity (\(I^2\)) by dividing the between-effect variance by the sum of between-effect and within-effect variance (sampling error variance). \(I^2\) is thus, in a standard meta-analysis, the proportion of variance that is due to heterogeneity as opposed to sampling error. When calculating \(I^2\), within-study variance is amalgamated across studies to create a “typical” within-study variance which serves as the sampling error variance (Higgins et al. 2003; Borenstein et al. 2017). Our goal here was to visualize and quantify the degree of variation among analyses in effect size estimates (Nakagawa and Cuthill 2007). We did not test for statistical significance.

Our use of \(I^{2}\) to quantify heterogeneity violates an important assumption, but this violation does not invalidate our use of \(I^{2}\) as a metric of how much heterogeneity can derive from analytical decisions. In standard meta-analysis, the statistic \(I^{2}\) quantifies the proportion of variance that is greater than we would expect if differences among estimates were due to sampling error alone (Rosenberg 2013). However, it is clear that this interpretation does not apply to our value of \(I^{2}\) because \(I^{2}\) assumes that each estimate is based on an independent sample (although these analyses can account for non-independence via hierarchical modelling), whereas all our effects were derived from largely or entirely overlapping subsets of the same dataset. Despite this, we believe that \(I^{2}\) remains a useful statistic for our purposes. This is because, in calculating \(I^{2}\), we are still setting a benchmark of expected variation due to sampling error based on the variance associated with each separate effect size estimate, and we are assessing how much (if at all) the variability among our effect sizes exceeds what would be expected had our effect sizes been based on independent data. In other words, our estimates can tell us how much proportional heterogeneity is possible from analytical decisions alone when sample sizes (and therefore meta-analytic within-estimate variance) are similar to the ones in our analyses. Among other implications, our violation of the independent sample assumption means that we (dramatically) over-estimate the variance expected due to sampling error, and because \(I^{2}\) is a proportional estimate, we thus underestimate the actual proportion of variance due to differences among analyses other than sampling error. However, correcting this underestimation would create a trivial value since we designed the study so that much of the variance would derive from analytic decisions as opposed to differences in sampled data. Instead, retaining the \(I^{2}\) value as typically calculated provides a useful comparison to \(I^{2}\) values from typical meta-analyses.

Interpretation of \(\tau^2\) also differs somewhat from traditional meta-analysis, and we discuss this further in the Results.

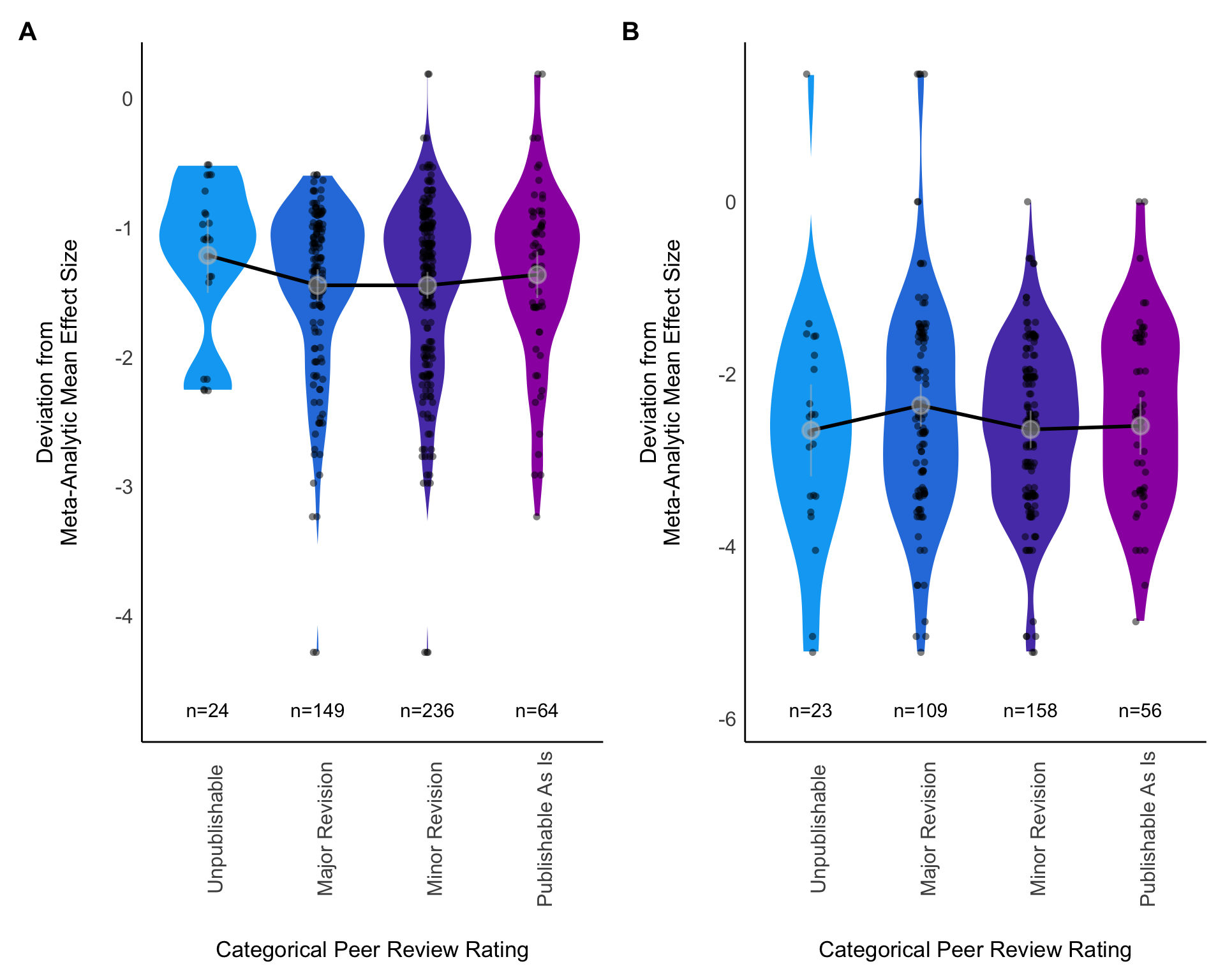

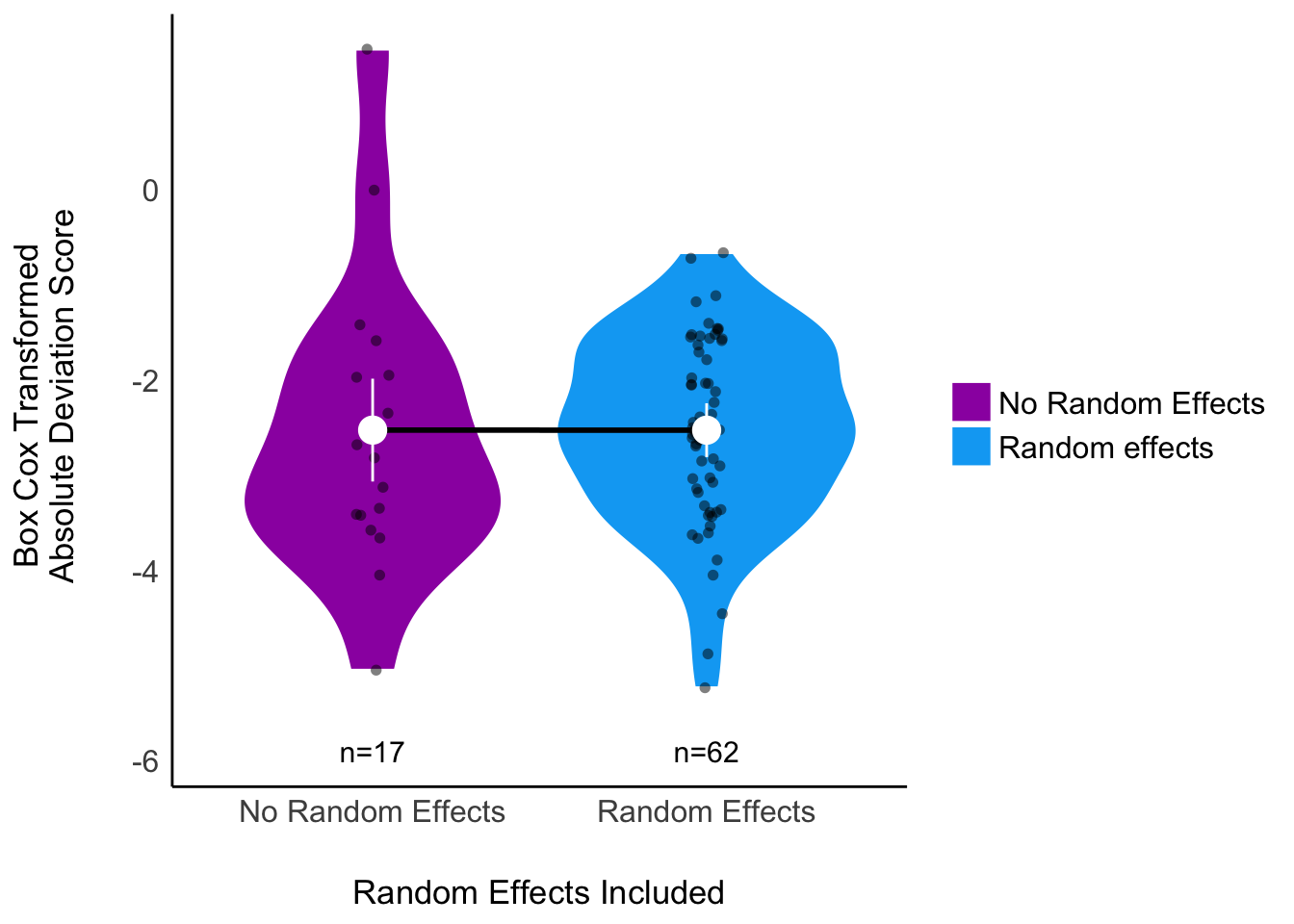

Finally, we assessed the extent to which deviations from the meta-analytic mean by individual effect sizes (\(Z_r\)) or the predicted values of the dependent variable (\(y_i\)) were explained by the peer rating of each analysis team’s method section, by a measurement of the distinctiveness of the set of predictor variables included in each analysis, and by the choice of whether or not to include random effects in the model. The deviation score, which served as the dependent variable in these analyses, is the absolute value of the difference between the meta-analytic mean \(\bar{Z_r}\) (or \(\bar{y_i}\)) and the individual \(Z_r\) (or \(y_i\)) estimate for each analysis. We used the Box-Cox transformation on the absolute values of deviation scores to achieve an approximately normal distribution (c.f. Fanelli and Ioannidis 2013; Fanelli, Costas, and Ioannidis 2017). We described variation in this dependent variable with both a series of univariate analyses and a multivariate analysis. All these analyses were general linear (mixed) models. These analyses were secondary to our estimation of variation in effect sizes described above. We wished to quantify relationships among variables, but we had no a priori expectation of effect size and made no dichotomous decisions about statistical significance.

In our meta-analyses based on Box-Cox transformed deviation scores, we leave these deviation scores unweighted. This is consistent with our registration, which did not mention weighting these scores. However, the fact that we did not mention weighting the scores was actually an error: we had intended to weight them, as is standard in meta-analysis, using the inverse variance of the Box-Cox transformed deviation scores Equation C.1. Unfortunately, when we did conduct the weighted analyses, they produced results in which some weighted estimates differed radically from the unweighted estimate because the weights were invalid. Such invalid weights can sometimes occur when the variance (upon which the weights depend) is partly a function of the effect size, as in our Box-Cox transformed deviation scores (Nakagawa et al. 2022). In the case of the Eucalyptus analyses, the most extreme outlier was weighted much more heavily (by close to two orders of magnitude) than any other effect sizes because the effect size was, itself, so high. Therefore, we made the decision to avoid weighting by inverse variance in all analyses of the Box-Cox transformed deviation scores. This was further justified because (a) most analyses have at least some moderately unreliable weights, and (b) the sample sizes were mostly very similar to each other across submitted analyses, and so meta-analytic weights are not particularly important here. We systematically investigated the impact of different weighting schemes and random effects on model convergence and results, see Section C.8 for more details.

When examining the extent to which reviewer ratings (on a scale from 0 to 100) explained deviation from the average effect (or predicted value), each analysis had been rated by multiple peer reviewers, so for each reviewer score to be included, we include each deviation score in the analysis multiple times. To account for the non-independence of multiple ratings of the same analysis, we planned to include analysis identity as a random effect in our general linear mixed model in the lme4 package in R (Bates et al. 2015; R Core Team 2024). To account for potential differences among reviewers in their scoring of analyses, we also planned to include reviewer identity as a random effect:

\[ \begin{alignat*}{2} {\mathrm{DeviationScore}_{j}} &=&& \mathrm{BoxCox}(|\mathrm{DeviationFromMean}_{j}|) \\ {\mathrm{DeviationScore}}_{ij} & \sim &&\mathrm{Rating}_{ij} + \\ & &&\mathrm{ReviewerID}_{i} + \\ & && {\mathrm{AnalysisID}}_{j} \\ {\mathrm{ReviewerID}}_i &\sim &&\mathcal{N}(0,\sigma_i^2) \\ {\mathrm{AnalysisID}}_j &\sim &&\mathcal{N}(0,\sigma_j^2) \\ \end{alignat*} \tag{2.7}\]

Where \(\text{DeviationFromMean}_{j}\) is the deviation from the meta-analytic mean for the \(j\)th analysis, \(\text{ReviewerID}_{i}\) is the random intercept assigned to each \(i\) reviewer, and \(\text{AnalysisID}_{j}\) is the random intercept assigned to each \(j\) analysis, both of which are assumed to be normally distributed with a mean of 0 and a variance of \(\sigma^{2}\). Absolute deviation scores were Box-Cox transformed using the step_box_cox() function from the timetk package in R (Dancho and Vaughan 2023; R Core Team 2024).

We conducted a similar analysis with the four categories of reviewer ratings ((1) deeply flawed and unpublishable, (2) publishable with major revision, (3) publishable with minor revision, (4) publishable as is) set as ordinal predictors numbered as shown here. As with the analyses above, we planned for these analyses to also include random effects of analysis identity and reviewer identity. Both of these analyses (1: 1-100 ratings as the fixed effect, 2: categorical ratings as the fixed effects) were planned to be conducted eight times for each dataset. Each of the four responses (\(Z_r\), \(y_{25th}\), \(y_{50th}\), \(y_{75th}\)) were to be compared once to the initial ratings provided by the peer reviewers, and again based on the revised ratings provided by the peer reviewers.

We planned to include random effects of both analysis identity and reviewer identity in these models comparing reviewer ratings with deviation scores. However, after we received the analyses, we discovered that a subset of analyst teams had either conducted multiple analyses and/or identified multiple effects per analysis as answering the target question. We therefore faced an even more complex potential set of random effects. We decided that including team ID and effect ID along with reviewer ID as random effects in the same model would almost certainly lead to model fit problems, and so we started with simpler models including just effect ID and reviewer ID. However, even with this simpler structure, our dataset was sparse, with reviewers rating a small number of analyses, resulting in models with singular fit (Section C.2). Removing one of the random effects was necessary for the models to converge. For both models of deviation from the meta-analytic mean explained by categorical or continuous reviewer ratings, we removed the random effect of effect ID, leaving reviewer ID as the only random effect.

We conducted analyses only with the final peer ratings after the opportunity for revision, not with the initial ratings. This was because when we recorded the final ratings, the initial ratings were over-written, therefore we did not have access to those initial values.

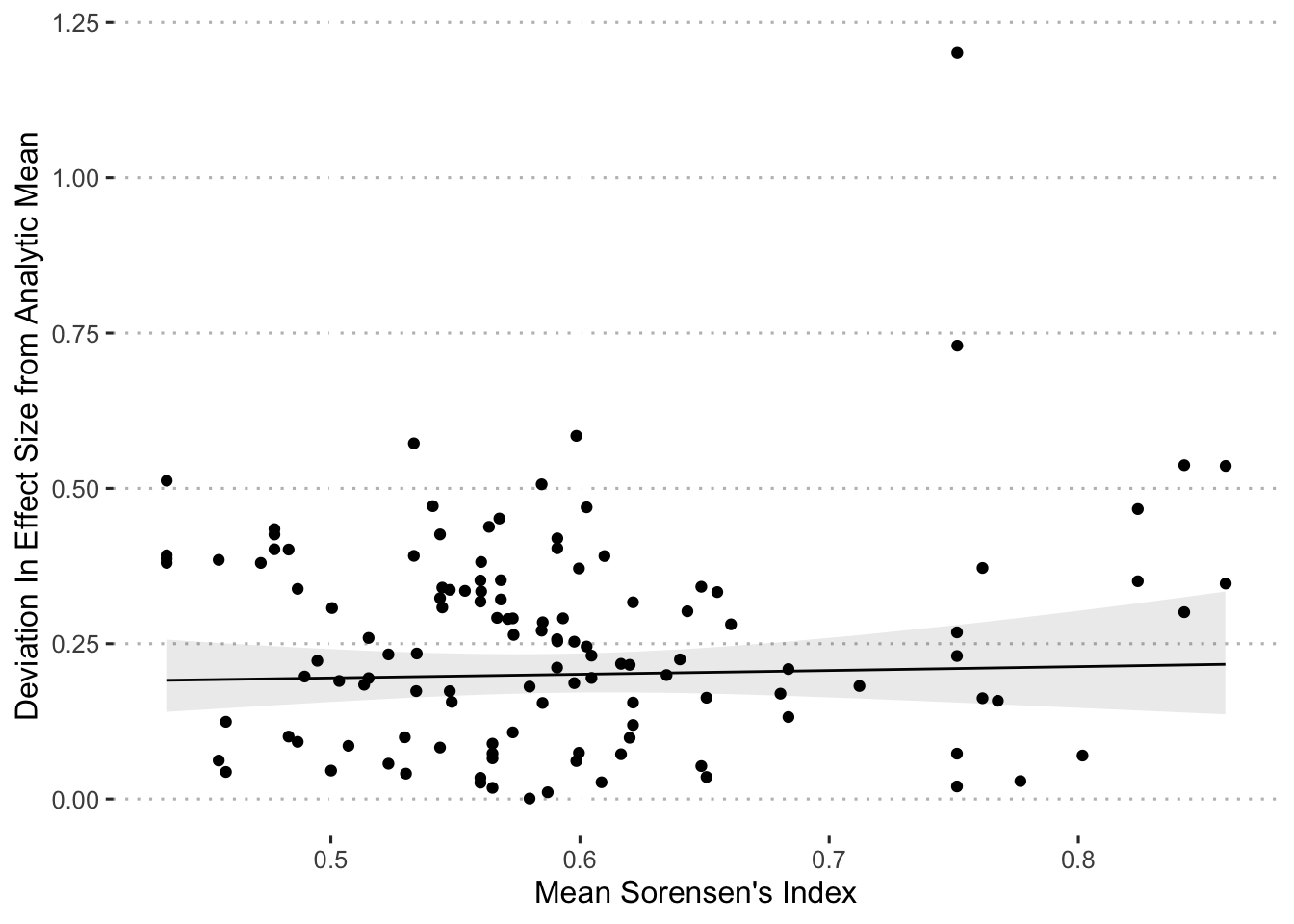

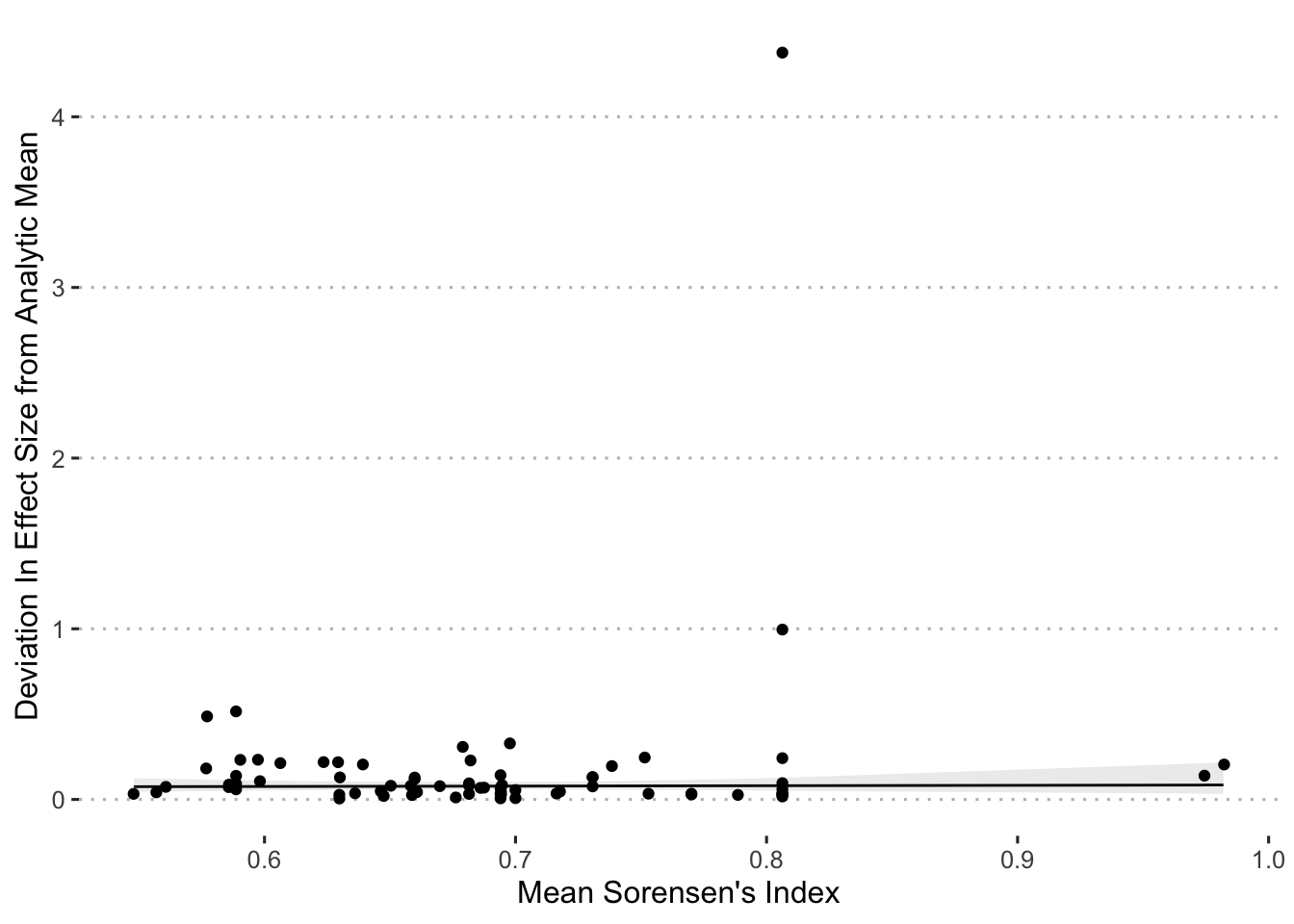

The next set of univariate analyses sought to explain deviations from the mean effects based on a measure of the distinctiveness of the set of variables included in each analysis. As a ‘distinctiveness’ score, we used Sorensen’s Similarity Index (an index typically used to compare species composition across sites), treating variables as species and individual analyses as sites. To generate an individual Sorensen’s value for each analysis required calculating the pairwise Sorensen’s value for all pairs of analyses (of the same dataset), and then taking the average across these Sorensen’s values for each analysis. We calculated the Sorensen’s index values using the betapart package (Baselga et al. 2023) in R:

\[ \beta_{\mathrm{Sorensen}} = \frac{b+c}{2a+b+c} \tag{2.8}\]

where \(a\) is the number of variables common to both analyses, \(b\) is the number of variables that occur in the first analysis but not in the second and \(c\) is the number of variables that occur in the second analysis. We then used the per-model average Sorensen’s index value as an independent variable to predict the deviation score in a general linear model, and included no random effect since each analysis is included only once, in R (R Core Team 2024):

\[ \mathrm{DeviationScore}_{j} \sim \beta_{\mathrm{Sorensen}_{j}} \tag{2.9}\]

When we planned this analysis, we anticipated that analysts would identify a single primary effect from each model, so that each model would appear in the analysis only once. Our expecation was incorrect because some analysts identified >1 effect per analysis, but we still chose to specify our model as registered and not use a random effect. This is because most models produced only one effect and so we expected that specifying a random effect to account for the few cases where >1 effect was included for a given model would prevent model convergence.

Note that this analysis contrasts with the analyses in which we used reviewer ratings as predictors because in the analyses with reviewer ratings, each effect appeared in the analysis approximately four times due to multiple reviews of each analysis, and so it was much more important to account for that variance through a random effect.

Next, we assessed the relationship between the inclusion of random effects in the analysis and the deviation from the mean effect size. We anticipated that most analysts would use random effects in a mixed model framework, but if we were wrong, we wanted to evaluate the differences in outcomes when using random effects versus not using random effects. Thus if there were at least 5 analyses that did and 5 analyses that did not include random effects, we would add a binary predictor variable “random effects included (yes/no)” to our set of univariate analyses and would add this predictor variable to our multivariate model described below. This standard was only met for the Eucalyptus analyses, and so we only examined inclusion of random effects as a predictor variable in meta-analysis of this set to analyses.

Finally, we conducted a multivariate analysis with the five predictors described above (peer ratings 0-100 and peer ratings of publishability 1-4; both original and revised and Sorensen’s index, plus a sixth for Eucalyptus, presence /absence of random effects) with random effects of analysis identity and reviewer identity in the lme4 package in R (Bates et al. 2015; R Core Team 2024). We had stated here in the text that we would use only the revised (final) peer ratings in this analysis, so the absence of the initial ratings is not a deviation from our plan:

\[ \begin{alignat*}{3} {\mathrm{DeviationScore}_{j}} &=&& \mathrm{BoxCox}(|\mathrm{DeviationFromMean}_{j}|) \\ {\mathrm{DeviationScore}}_{ij} &\sim && {\mathrm{RatingContinuous}}_{ij} + \\ & && {\mathrm{RatingCategorical}}_{ij} + \\ & && {\beta_\mathrm{Sorensen}}_{j} + \\ & && {\mathrm{AnalysisID}}_{j} + \\ & && {\mathrm{ReviewerID}}_{i} \\ {\mathrm{ReviewerID}}_{i} &\sim &&\mathcal{N}(0,\sigma_i^2) \\ {\mathrm{AnalysisID}}_{j} &\sim &&\mathcal{N}(0,\sigma_j^2) \end{alignat*} \tag{2.10}\]

We conducted all the analyses described above eight times; for each of the four responses (\(Z_r\), \(y_{25th}\), \(y_{50th}\), \(y_{75th}\)) one time for each of the two datasets.

We have publicly archived all relevant data, code, and materials on the Open Science Framework (https://osf.io/mn5aj/). Archived data includes the original datasets distributed to all analysts, any edited versions of the data analyzed by individual groups, and the data we analyzed with our meta-analyses, which include the effect sizes derived from separate analyses, the statistics describing variation in model structure among analyst groups, and the anonymized answers to our surveys of analysts and peer reviewers. Similarly, we have archived both the analysis code used for each individual analysis (where available) and the code from our meta-analyses. We have also archived copies of our survey instruments from analysts and peer reviewers.

Our rules for excluding data from our study were as follows. We excluded from our synthesis any individual analysis submitted after we had completed peer review or those unaccompanied by analysis files that allow us to understand what the analysts did. We also excluded any individual analysis that did not produce an outcome that could be interpreted as an answer to our primary question (as posed above) for the respective dataset. For instance, this means that in the case of the data on blue tit chick growth, we excluded any analysis that did not include something that can be interpreted as growth or size as a dependent (response) variable, and in the case of the Eucalyptus establishment data, we excluded any analysis that did not include a measure of grass cover among the independent (predictor) variables. Also, as described above, any analysis that could not produce an effect that could be converted to a signed \(Z_r\) was excluded from analyses of \(Z_r\).

Some analysts had difficulty implementing our instructions to derive the out-of-sample predictions, and in some cases (especially for the Eucalyptus data), they submitted predictions with implausibly extreme values. We believed these values were incorrect and thus made the conservative decision to exclude out-of-sample predictions where the estimates were > 3 standard deviations from the mean value from the full dataset provided to teams for analysis.

1. Evaluating model fit.

We evaluated all fitted models using the performance::performance() function from the performance package (Lüdecke, Ben-Shachar, et al. 2021) and the glance() function from the broom.mixed package (Bolker et al. 2024). For all models, we calculated the square root of the residual variance (Sigma) and the root mean squared error (RMSE). For GLMMs performance::performance() calculates the marginal and conditional \(R^2\) values as well as the contribution of random effects (ICC), based on Nakagawa et al. (2017). The conditional \(R^2\) accounts for both the fixed and random effects, while the marginal \(R^2\) considers only the variance of the fixed effects. The contribution of random effects is obtained by subtracting the marginal \(R^2\) from the conditional \(R^2\).

2. Exploring outliers and analysis quality.

After seeing the forest plots of \(Z_r\) values and noticing the existence of a small number of extreme outliers, especially from the Eucalyptus analyses, we wanted to understand the degree to which our heterogeneity estimates were influenced by these outliers. To explore this question, we removed the highest two and lowest two values of \(Z_r\) in each dataset and re-calculated our heterogeneity estimates.

To help understand the possible role of the quality of analyses in driving the heterogeneity we observed among estimates of \(Z_r\), we created forest plots and recalculated our heterogeneity estimates after removing all effects from analysis teams that had received at least one rating of “deeply flawed and unpublishable” and then again after removing all effects from analysis teams with at least one rating of either “deeply flawed and unpublishable” or “publishable with major revisions”. We also used self-identified levels of statistical expertise to examine heterogeneity when we retained analyses only from analysis teams that contained at least one member who rated themselves as “highly proficient” or “expert” (rather than “novice” or “moderately proficient”) in conducting statistical analyses in their research area in our intake survey.

Additionally, to assess potential impacts of highly collinear predictor variables on estimates of \(Z_r\) in blue tit analyses, we created forest plots (Figure B.5) and recalculated our heterogeneity estimates after we removed analyses that contained the brood count after manipulation and the highly correlated (correlation of 0.89, Figure D.2) brood count at day 14. This removal included the one effect based on a model that contained both these variables and a third highly correlated variable, the estimate of number of chicks fledged (the only model that included the estimate of number of chicks fledged). We did not conduct a similar analysis for the Eucalyptus dataset because there were no variables highly collinear with the primary predictors (grass cover variables) in that dataset (Figure D.1).

3. Exploring possible impacts of lower quality estimates of degrees of freedom.

Our meta-analyses of variation in \(Z_r\) required variance estimates derived from estimates of the degrees of freedom in original analyses from which \(Z_r\) estimates were derived. While processing the estimates of degrees of freedom submitted by analysts, we identified a subset of these estimates in which we had lower confidence because two or more effects from the same analysis were submitted with identical degrees of freedom. We therefore conducted a second set of (more conservative) meta-analyses that excluded these \(Z_r\) estimates with identical estimates of degrees of freedom and we present these analyses in the supplement.

After we initiated our project, a paper was published outlining best practices in many-analysts studies (Aczel et al. 2021). Although we did not have access to this document when we implemented our project, our study complies with these practices nearly completely. The one exception is that although we requested analysis code from analysts, we did not require submission of code.

2.6 Step 6: Facilitated Discussion and Collaborative Write-Up of Manuscript

We planned for analysts and initiating authors to discuss the limitations, results, and implications of the study and collaborate on writing the final manuscript for review as a stage-2 Registered Report.

As described above, due to the large number of recruited analysts and reviewers and the anticipated challenges of receiving and integrating feedback from so many authors, we limited analyst and reviewer participation in the production of the final manuscript to an invitation to call attention to serious problems with the manuscript draft.

We built an R package, ManyEcoEvo:: to conduct the analyses described in this study (Gould et al. 2023), which can be downloaded from https://github.com/egouldo/ManyEcoEvo/ to reproduce our analyses or replicate the analyses described here using alternate datasets. Data cleaning and preparation of analysis-data, as well as the analysis, is conducted in R (R Core Team 2024) reproducibly using the targets package (Landau 2021). This data and analysis pipeline is stored in the ManyEcoEvo:: package repository and its outputs are made available to users of the package when the library is loaded.

The full manuscript, including further analysis and presentation of results is written in Quarto (Allaire, Teague, et al. 2024). The source code to reproduce the manuscript is hosted at https://github.com/egouldo/ManyAnalysts/ (Gould et al. 2024), and the rendered version of the source code may be viewed at https://egouldo.github.io/ManyAnalysts/. All R packages and their versions used in the production of the manuscript are listed at Section 6.6.

3 Results

3.1 Summary Statistics

In total, 173 analyst teams, comprising 246 analysts, contributed 182 usable analyses (compatible with our meta-analyses and provided with all information needed for inclusion) of the two datasets examined in this study which yielded 215 effects. Analysts produced 134 distinct effects that met our criteria for inclusion in at least one of our meta-analyses for the blue tit dataset. Analysts produced 81 distinct effects meeting our criteria for inclusion for the Eucalyptus dataset. Excluded analyses and effects either did not answer our specified biological questions, were submitted with insufficient information for inclusion in our meta-analyses, or were incompatible with production of our effect size(s). We expected cases of this final scenario (incompatible analyses), for instance we cannot extract a \(Z_r\) from random forest models, which is why we analyzed two distinct types of effects, \(Z_r\) and out-of-sample. Some effects only provided sufficient information for a subset of analyses and were only included in that subset. For both datasets, most submitted analyses incorporated mixed effects. Submitted analyses of the blue tit dataset typically specified normal error and analyses of the Eucalyptus dataset typically specified a non-normal error distribution (Table A.1).

For both datasets, the composition of models varied substantially in regards to the number of fixed and random effects, interaction terms, and the number of data points used, and these patterns differed somewhat between the blue tit and Eucalyptus analyses (See Table A.2). Focussing on the models included in the \(Z_r\) analyses (because this is the larger sample), blue tit models included a similar number of fixed effects on average (mean 5.2 \(\pm\) 2.92 \(\text{SD}\), range: 1 to 19) as Eucalyptus models (mean 5.01 \(\pm\) 3.83 \(\text{SD}\), range: 1 to 13), but the standard deviation in number of fixed effects was somewhat larger in the Eucalyptus models. The average number of interaction terms was much larger for the blue tit models (mean 0.44 \(\pm\) 1.11 \(\text{SD}\), range: 0 to 10) than for the Eucalyptus models (mean 0.16 \(\pm\) 0.65 \(\text{SD}\), range: 0 to 5), but still under 0.5 for both, indicating that most models did not contain interaction terms. Blue tit models also contained more random effects (mean 3.53 \(\pm\) 2.08 \(\text{SD}\), range: 0 to 10) than Eucalyptus models (mean 1.41 \(\pm\) 1.09 \(\text{SD}\), range: 0 to 4). The maximum possible sample size in the blue tit dataset (3720 nestlings) was an order of magnitude larger than the maximum possible in the Eucalyptus dataset (351 plots), and the means and standard deviations of the sample size used to derive the effects eligible for our study were also an order of magnitude greater for the blue tit dataset (mean 2611.09 \(\pm\) 937.48 \(\text{SD}\), range: 76 to 76) relative to the Eucalyptus models (mean 298.43 \(\pm\) 106.25 \(\text{SD}\), range: 18 to 351). However, the standard deviation in sample size from the Eucalyptus models was heavily influenced by a few cases of dramatic sub-setting (described below). Approximately three quarters of Eucalyptus models used sample sizes within 3\(\%\) of the maximum. In contrast, fewer than 20\(\%\) of blue tit models relied on sample sizes within 3\(\%\) of the maximum, and approximately 50\(\%\) of blue tit models relied on sample sizes 29\(\%\) or more below the maximum.

Analysts provided qualitative descriptions of the conclusions of their analyses. Each analysis team provided one conclusion per dataset. These conclusions could take into account the results of any formal analyses completed by the team as well as exploratory and visual analyses of the data. Here we summarize all qualitative responses, regardless of whether we had sufficient information to use the corresponding model results in our quantitative analyses below. We classified these conclusions into the categories summarized below (Table 3.1):

- Mixed: some evidence supporting a positive effect, some evidence supporting a negative effect

- Conclusive negative: negative relationship described without caveat

- Qualified negative: negative relationship but only in certain circumstances or where analysts express uncertainty in their result

- Conclusive none: analysts interpret the results as conclusive of no effect

- None qualified: analysts describe finding no evidence of a relationship but they describe the potential for an undetected effect

- Qualified positive: positive relationship described but only in certain circumstances or where analysts express uncertainty in their result

- Conclusive positive: positive relationship described without caveat

For the blue tit dataset, most analysts concluded that there was negative relationship between measures of sibling competition and nestling growth, though half the teams expressed qualifications or described effects as mixed or absent. No analysts concluded that there was a positive relationship even though some individual effect sizes were positive, apparently because all analysts who produced effects indicating positive relationships also produced effects indicating negative relationships and therefore described their results as qualified, mixed, or absent. For the Eucalyptus dataset, there was a broader spread of conclusions with at least one analyst team providing conclusions consistent with each conclusion category. The most common conclusion for the Eucalyptus dataset was that there was no relationship between grass cover and Eucalyptus recruitment (either conclusive or qualified description of no relationship), but more than half the teams concluded that there were effects; negative, positive, or mixed.

| Dataset | Mixed | Negative Conclusive | Negative Qualified | None Conclusive | None Qualified | Positive Conclusive | Positive Qualified |

|---|---|---|---|---|---|---|---|

| blue tit | 5 | 37 | 27 | 4 | 1 | 0 | 0 |

| Eucalyptus | 8 | 6 | 12 | 19 | 12 | 2 | 4 |

3.2 Distribution of Effects

3.2.1 Standardized Effect Sizes (\(Z_r\))

Although the majority (118 of 131) of the usable \(Z_r\) effects from the blue tit dataset found nestling growth decreased with sibling competition, and the meta-analytic mean \(\bar{Z_r}\) (Fisher’s transformation of the correlation coefficient) was convincingly negative (-0.35 \(\pm\) 0.06 95\(\%\)CI), there was substantial variability in the strength and the direction of this effect. \(Z_r\) ranged from -1.55 to 0.38, and approximately continuously from -0.93 to 0.19 ( Figure 3.1 (a) and Table 3.4), and of the 118 effects with negative slopes, 93 had confidence intervals exluding 0. Of the 13 with positive slopes indicating increased nestling growth in the presence of more siblings, 2 had confidence intervals excluding zero (Figure 3.1 (a)).

Meta-analysis of the Eucalyptus dataset also showed substantial variability in the strength of effects as measured by \(Z_r\), and unlike with the blue tits, a notable lack of consistency in the direction of effects (Figure 3.1 (b), Table 3.4). \(Z_r\) ranged from -4.47 (Figure A.2), indicating a strong tendency for reduced Eucalyptus seedling success as grass cover increased, to 0.39, indicating the opposite. Although the range of reported effects skewed strongly negative, this was due to a small number of substantial outliers. Most values of \(Z_r\) were relatively small with values \(\lt |0.2|\) and the meta-analytic mean effect size was close to zero (-0.09 \(\pm\) 0.12 95\(\%\)CI). Of the 79 effects, fifty-three had confidence intervals overlapping zero, approximately a quarter (fifteen) crossed the traditional threshold of statistical significance indicating a negative relationship between grass cover and seedling success, and eleven crossed the significance threshold indicating a positive relationship between grass cover and seedling success (Figure 3.1 (b)).

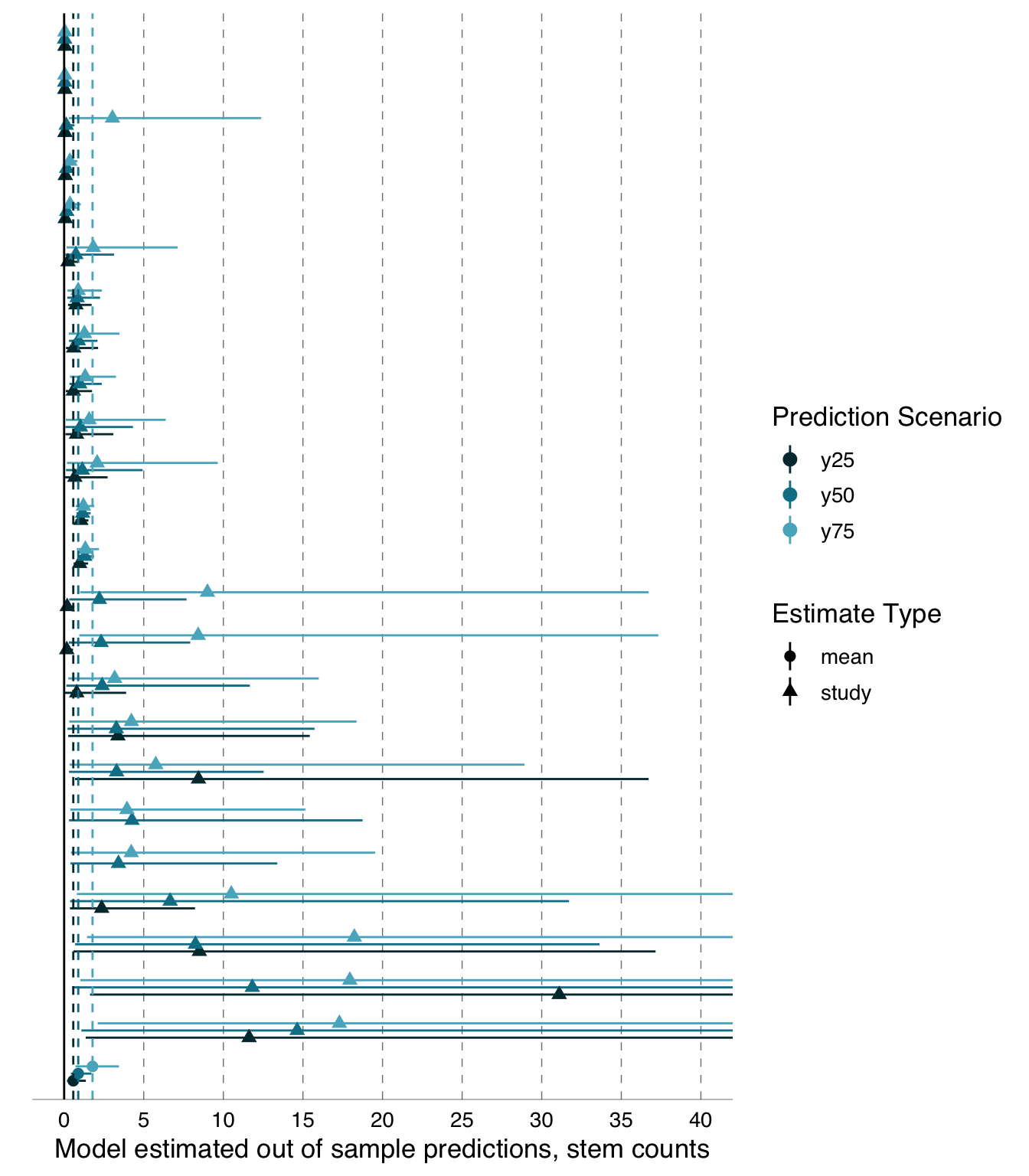

3.2.2 Out-of-sample predictions \(y_{i}\)

As with the effect size \(Z_r\), we observed substantial variability in the size of out-of-sample predictions derived from the analysts’ models. Blue tit predictions (Figure 3.2), which were z-score-standardised to accommodate the use of different response variables, always ranged far in excess of one standard deviation. In the \(y_{25}\) scenario, model predictions ranged from -1.84 to 0.42 (a range of 2.68 standard deviations), in the \(y_{50}\) they ranged from -0.52 to 1.08 (a range of 1.63 standard deviations), and in the \(y_{75}\) scenario they ranged from -0.03 to 1.59 (a range of 1.9 standard deviations). As should be expected given the existence of both negative and positive \(Z_r\) values, all three out-of-sample scenarios produced both negative and positive predictions, although as with the \(Z_r\) values, there is a clear trend for scenarios with more siblings to be associated with smaller nestlings. This is supported by the meta-analytic means of these three sets of predictions which were -0.66 (95\(\%\)CI -0.82–0.5) for the \(y_{25}\), 0.34 (95\(\%\)CI 0.2-0.48) for the \(y_{50}\), and 0.67 (95\(\%\)CI 0.57-0.77) for the \(y_{75}\).

Eucalyptus out-of-sample predictions also varied substantially (Figure 3.3), but because they were not z-score-standardised and are instead on the original count scale, the types of interpretations we can make differ. The predicted Eucalyptus seedling counts per 15 x 15 m plot for the \(y_{25}\) scenario ranged from 0.04 to 26.99, for the \(y_{50}\) scenario ranged from 0.04 to 44.34, and for the \(y_{75}\) scenario they ranged from 0.03 to 61.34. The meta-analytic mean predictions for these three scenarios were similar; 1.27 (95\(\%\)CI 0.59-2.3) for the \(y_{25}\), 2.92 (95\(\%\)CI 0.98-3.89) for the \(y_{50}\), and 2.92 (95\(\%\)CI 1.59-4.9) for the \(y_{75}\) scenarios respectively.

3.3 Quantifying Heterogeneity

3.3.1 Effect Sizes (\(Z_r\))

We quantified both absolute (\(\tau^{2}\)) and relative (\(I^{2}\)) heterogeneity resulting from analytical variation. Both measures suggest that substantial variability among effect sizes was attributable to the analytical decisions of analysts.

The total absolute level of variance beyond what would typically be expected due to sampling error, \(\tau^{2}\) (Table 3.2), among all usable blue tit effects was 0.08 and for Eucalyptus effects was 0.27. This is similar to or exceeding the median value (0.105) of \(\tau^{2}\) found across 31 recent meta-analyses (calculated from the data in Yang et al. 2023). The similarity of our observed values to values from meta-analyses of different studies based on different data suggest the potential for a large portion of heterogeneity to arise from analytical decisions. For further discussion of interpretation of \(\tau^{2}\) in our study, please consult discussion of post hoc analyses below.

Team. \({\tau}_\text{effectID}^{2}\) is the absolute heterogeneity for the random effect effectID nested under Team. effectID is the unique identifier assigned to each individual statistical effect submitted by an analysis team. We nested effectID within analysis team identity (Team) because analysis teams often submitted >1 statistical effect, either because they considered >1 model or because they derived >1 effect per model, especially when a model contained a factor with multiple levels that produced >1 contrast. \({\tau}_\text{Total}^{2}\) is the total absolute heterogeneity. \({I}_\text{Total}^{2}\) is the proportional heterogeneity; the proportion of the variance among effects not attributable to sampling error, \({I}_\text{Team}^{2}\) is the subset of the proportional heterogeneity due to differences among Teams and \({I}_\text{Team, effectID}^{2}\) is subset of the proportional heterogeneity attributable to among-effectID differences.

Dataset |

\[{N}_\text{Obs}\] |

\[{\tau}_\text{Total}^{2}\] |

\[{\tau}_\text{Team}^{2}\] |

\[{\tau}_\text{effectID}^{2}\] |

\[{I^2}_\text{Total}\] |

\[{I^2}_\text{Team}\] |

\[{I^2}_\text{Team, effectID}\] |

|---|---|---|---|---|---|---|---|

| All analyses | |||||||

| Eucalyptus | 79 | 0.27 | 0.02 | 0.25 | 98.59% | 6.89% | 91.70% |

| blue tit | 131 | 0.08 | 0.03 | 0.05 | 97.61% | 36.71% | 60.90% |

| Blue tit analyses containing highly collinear predictors removed | |||||||

| blue tit | 117 | 0.07 | 0.04 | 0.03 | 96.92% | 58.18% | 38.75% |

| All analyses, Outliers removed | |||||||

| Eucalyptus | 75 | 0.01 | 0.00 | 0.01 | 66.19% | 19.25% | 46.94% |

| blue tit | 127 | 0.07 | 0.04 | 0.02 | 96.84% | 64.63% | 32.21% |

| Analyses receiving at least one 'Unpublishable' rating removed | |||||||

| Eucalyptus | 55 | 0.01 | 0.01 | 0.01 | 79.74% | 28.31% | 51.43% |

| blue tit | 109 | 0.08 | 0.03 | 0.05 | 97.52% | 35.68% | 61.84% |

| Analyses receiving at least one 'Unpublishable' and or 'Major Revisions' rating removed | |||||||

| Eucalyptus | 13 | 0.03 | 0.03 | 0.00 | 88.91% | 88.91% | 0.00% |

| blue tit | 32 | 0.14 | 0.01 | 0.13 | 98.72% | 5.17% | 93.55% |

| Analyses from teams with highly proficient or expert data analysts | |||||||

| Eucalyptus | 34 | 0.58 | 0.02 | 0.56 | 99.41% | 3.47% | 95.94% |

| blue tit | 89 | 0.09 | 0.03 | 0.06 | 97.91% | 31.43% | 66.49% |

In our analyses, \(I^{2}\) is a plausible index of how much more variability among effect sizes we have observed, as a proportion, than we would have observed if sampling error were driving variability. We discuss our interpretation of \(I^{2}\) further in the methods, but in short, it is a useful metric for comparison to values from published meta-analyses and provides a plausible value for how much heterogeneity could arise in a normal meta-analysis with similar sample sizes due to analytical variability alone. In our study, total \(I^{2}\) for the blue tit \(Z_r\) estimates was extremely large, at 97.61%, as was the Eucalyptus estimate (98.59% Table 3.2).

Although the overall \(I^{2}\) values were similar for both Eucalyptus and blue tit analyses, the relative composition of that heterogeneity differed. For both datasets, the majority of heterogeneity in \(Z_r\) was driven by differences among effects as opposed to differences among teams, though this was more prominent for the Eucalyptus dataset, where nearly all of the total heterogeneity was driven by differences among effects (91.7%) as opposed to differences among teams (6.89%) (Table 3.2).

3.3.2 Out-of-sample predictions (\(y_{i}\))